Most of you have probably heard of, or even used, AI text summarizing apps or tools. They condense lengthy text such as blogs, research papers, and essays into concise summaries while preserving the original meaning.

These apps are widely used by students, teachers, writers, researchers, and even marketers around the world. As a developer, have you ever wondered how such apps are created? If so, this detailed guide is for you.

In this blog, we are going to explain a step-by-step procedure through which developers can create a text summarizing model backed by AI.

Skills Required for Text Summarizer Development

Before we move on to the steps, let us first look at the skills you need to complete the model development.

NLP knowledge: Natural language processing (NLP) is a branch of artificial intelligence that enables machines to comprehend both the language and the context of input text.

Python: A high-level programming language that is widely used for building websites, online tools, and platforms. All the code in this guide is written in it.

Deep Learning Frameworks: You need a strong understanding of deep learning frameworks such as TensorFlow and PyTorch.

Datasets: The Hugging Face datasets library, which provides ready-made datasets along with utilities for loading and preprocessing them quickly.

Step-by-Step Procedure for Building an AI Text Summarizing Tool

Below are the steps that you need to follow to develop a text summarizing model that is backed by AI technologies.

1. Decide the Working Approach of the Summarization Model:

First of all, you need to decide how the summarization model will work. There are two options to choose from:

- Abstractive approach: The model generates a summary using new words and phrases that may not appear in the source text.

- Extractive approach: The opposite of the above; the model builds a summary by selecting the most important sentences from the input and reusing their exact wording.

It is important to note that the two approaches require different development methods. In this guide, we will build a summarization model that uses the abstractive approach.
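To make the contrast concrete, here is a minimal sketch of the extractive approach using only the Python standard library. It is an illustration under simple assumptions (word-frequency scoring, a tiny hand-written stop-word list, and a hypothetical function name `extractive_summary`), not the method this guide builds. Every sentence it returns is copied verbatim from the input, which is exactly what an abstractive model does not have to do.

```python
import re
from collections import Counter

# Tiny illustrative stop-word list (an assumption; real systems use fuller lists).
STOP_WORDS = {"a", "an", "the", "is", "are", "of", "to", "and", "in", "it",
              "that", "on", "for", "as", "with"}


def _content_words(text):
    """Lowercase the text and keep only words that are not stop words."""
    return [w for w in re.findall(r"[a-z']+", text.lower()) if w not in STOP_WORDS]


def extractive_summary(text, num_sentences=2):
    """Return the highest-scoring sentences, verbatim, in their original order."""
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s.strip()]
    freq = Counter(_content_words(text))

    def score(sentence):
        # Average document-level frequency of the sentence's content words.
        words = _content_words(sentence)
        return sum(freq[w] for w in words) / max(len(words), 1)

    ranked = sorted(range(len(sentences)), key=lambda i: score(sentences[i]), reverse=True)
    keep = sorted(ranked[:num_sentences])  # restore document order
    return " ".join(sentences[i] for i in keep)


sample = ("Cats sleep a lot. Cats like to chase mice and cats like fish. "
          "The weather was mild yesterday.")
print(extractive_summary(sample, num_sentences=1))
```

Because the output is assembled from existing sentences, an extractive summary never introduces wording that is absent from the source; the BART model used in the rest of this guide is free to rephrase.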

2. Download and Import Libraries:

From here, development begins. You need to download and import the essential libraries discussed below.

Transformers: This library provides numerous pre-trained models, e.g., BART, which can be fine-tuned for text summarization.

NLTK: The Natural Language Toolkit, which facilitates tasks such as tokenization, stop-word removal, and more.

FastAPI: A framework for building REST APIs, used here to deploy the summarization model.

ROUGE Score: A metric used to determine the quality of the summaries generated by the app.

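You are required to download, install, and import all these libraries. For installation, use this command:

| pip install transformers datasets evaluate rouge_score nltk fastapi uvicorn torch |

Besides the libraries listed above, this command installs evaluate and rouge_score for ROUGE scoring, uvicorn for serving a FastAPI app, and torch (PyTorch), which the generation code in this guide relies on. For importing, use Python's import statement at the top of your script.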
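Before importing anything, it can help to confirm that the installation succeeded. The sketch below uses only the standard library to check whether each package can be found; the helper name `missing_packages` is hypothetical, and the list mirrors the libraries named in this step.

```python
import importlib.util

# Import names of the libraries discussed in this step.
REQUIRED = ["transformers", "datasets", "nltk", "fastapi"]


def missing_packages(names):
    """Return the package names that cannot be found in the current environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]


missing = missing_packages(REQUIRED)
if missing:
    print("Install these first:", ", ".join(missing))
else:
    print("All required libraries are available.")
```

Once nothing is reported missing, ordinary statements such as `import nltk` or `from transformers import pipeline` will work in your script.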

3. Data Collection:

Remember, the foundation of any AI-powered summarization model lies in data collection. The more high-quality data it is trained on, the better the results it can generate.

So, devote significant time and effort to collecting data from internet resources such as blogs, journals, scientific papers, social media posts, and more. There are also pre-made datasets available online. One of the most popular ones is the CNN/Daily Mail dataset.

We have downloaded this dataset as a CSV file for easy handling and importing. You are expected to do the same.
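If you keep the dataset as a CSV file, a quick way to inspect it is with Python's built-in csv module. The sketch below assumes the file has `article` and `highlights` columns (the field names the CNN/Daily Mail dataset uses later in this guide); the helper name `read_article_pairs` and any file path you pass to it are hypothetical.

```python
import csv


def read_article_pairs(csv_path, limit=None):
    """Yield (article, highlights) pairs from a CSV export of the dataset."""
    with open(csv_path, newline="", encoding="utf-8") as handle:
        for index, row in enumerate(csv.DictReader(handle)):
            if limit is not None and index >= limit:
                break
            yield row["article"], row["highlights"]
```

For example, `for article, summary in read_article_pairs("train.csv", limit=3): print(summary)` would print the first three reference summaries, assuming a file named train.csv exists in the working directory.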

4. Import & Preprocess the Data:

Now, it is time to import the collected data. The code is below:

| from datasets import load_dataset

# "3.0.0" selects the dataset version; cnn_dailymail requires one to be named
dataset = load_dataset("cnn_dailymail", "3.0.0") |

Once the data is imported, the next step is preprocessing. This involves tasks such as tokenization, where the text is divided into small chunks of words and sub-words.

The process also includes stop-word removal, in which very common words (such as "the", "is", and "and") are removed from the collected data without damaging the original meaning. Below is Python code that can be used for this kind of preprocessing.

| import nltk
from nltk.corpus import stopwords
from nltk.stem import WordNetLemmatizer
from nltk.tokenize import word_tokenize

# Download necessary NLTK data
nltk.download("punkt")
nltk.download("punkt_tab")  # tokenizer data used by newer NLTK releases
nltk.download("stopwords")
nltk.download("wordnet")    # required by WordNetLemmatizer

# Sample text
text = "Input your text here."

# Tokenization
tokens = word_tokenize(text)

# Remove stopwords
stop_words = set(stopwords.words("english"))
filtered_tokens = [word for word in tokens if word.lower() not in stop_words]

# Lemmatization
lemmatizer = WordNetLemmatizer()
lemmatized_tokens = [lemmatizer.lemmatize(word) for word in filtered_tokens]

print("Processed Tokens:", lemmatized_tokens) |

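Preprocessing the collected data gives you cleaner text to work with. Note that this kind of cleaning is mainly useful for inspecting the data and for extractive methods; the BART tokenizer used in the later steps takes the raw text directly.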

5. Model Architecture:

The architecture plays a crucial role in making your text summarization model work effectively. There are several options available; a good and popular one is the transformer architecture. Transformer models such as BERT and BART are very good at handling long-range dependencies.

These models are pre-trained on massive corpora and can be fine-tuned for numerous text summarization tasks. We are going to use the BART model because its implementation is easy. You just need to import pipeline from transformers, which gives access to pre-trained summarization models.

Below is simplified code that you can use.

| from transformers import pipeline

# Load summarization pipeline
summarizer = pipeline("summarization", model="facebook/bart-large-cnn")

# Input text
text = """Input your text here."""

# Generate summary
summary = summarizer(text, max_length=50, min_length=25, do_sample=False)
print("Summary:", summary[0]["summary_text"]) |

This code downloads the pre-trained model on its first run and prints a summary of between 25 and 50 tokens.

6. Model Training:

Keep this in mind: the more effectively the model is trained, the better the summaries it will create. The process involves configuring the model and then optimizing its parameters.

Several components are involved here. For instance, the loss function measures how far the model's predicted summary is from the reference summary, which guides the model to learn from its mistakes and produce better results next time.
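To give a feel for what a loss function computes, here is a toy sketch of token-level cross-entropy, the kind of loss typically used when training sequence-to-sequence models. The three-token vocabulary and the probability values are made up for illustration; real training computes this over the model's full vocabulary inside the framework.

```python
import math


def cross_entropy(predicted_probs, target_ids):
    """Average negative log-probability the model assigned to each correct token."""
    losses = [-math.log(step[target]) for step, target in zip(predicted_probs, target_ids)]
    return sum(losses) / len(losses)


# Each row is the model's probability distribution over a toy 3-token vocabulary
# at one position of the summary; targets holds the correct token id per position.
targets = [0, 1]
confident = [[0.90, 0.05, 0.05], [0.10, 0.80, 0.10]]
unsure = [[0.40, 0.30, 0.30], [0.30, 0.40, 0.30]]

print("confident model loss:", cross_entropy(confident, targets))
print("unsure model loss:   ", cross_entropy(unsure, targets))
```

The confident predictions yield the lower loss. Training repeatedly adjusts the model's parameters to push this number down on the reference summaries.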

Here, we are again going to use the BART model. We need to import the relevant classes, including BartForConditionalGeneration, BartTokenizer, Trainer, and TrainingArguments.

| from datasets import load_dataset
from transformers import (
    BartForConditionalGeneration,
    BartTokenizer,
    DataCollatorForSeq2Seq,
    Trainer,
    TrainingArguments,
)

# Load dataset
dataset = load_dataset("cnn_dailymail", "3.0.0")

# Load pre-trained model and tokenizer
model = BartForConditionalGeneration.from_pretrained("facebook/bart-large-cnn")
tokenizer = BartTokenizer.from_pretrained("facebook/bart-large-cnn")

# Tokenize articles (inputs) and highlights (target summaries)
def preprocess_function(examples):
    model_inputs = tokenizer(examples["article"], max_length=1024, truncation=True)
    labels = tokenizer(text_target=examples["highlights"], max_length=128, truncation=True)
    model_inputs["labels"] = labels["input_ids"]
    return model_inputs

tokenized_datasets = dataset.map(preprocess_function, batched=True)

# Pad inputs and labels to a common length within each batch
data_collator = DataCollatorForSeq2Seq(tokenizer, model=model)

# Training arguments
training_args = TrainingArguments(
    output_dir="./results",
    eval_strategy="epoch",  # named evaluation_strategy in older transformers releases
    learning_rate=2e-5,
    per_device_train_batch_size=4,
    num_train_epochs=3,
    weight_decay=0.01,
    save_total_limit=2,
)

# Trainer
trainer = Trainer(
    model=model,
    args=training_args,
    train_dataset=tokenized_datasets["train"],
    eval_dataset=tokenized_datasets["validation"],
    data_collator=data_collator,
)

trainer.train() |

7. Implementation:

Now that your text summarization model is trained, it is time to implement it. This step relies on a deep learning framework such as TensorFlow or PyTorch; the code below uses PyTorch tensors, so make sure PyTorch is installed.

Here again, we will use BART, and the code is given below.

| from transformers import BartTokenizer, BartForConditionalGeneration

# Load pre-trained BART model and tokenizer
tokenizer = BartTokenizer.from_pretrained("facebook/bart-large-cnn")
model = BartForConditionalGeneration.from_pretrained("facebook/bart-large-cnn")

# Input text
text = """Input your text here."""

# Tokenize input (BART does not need a task prefix such as "summarize: ")
inputs = tokenizer.encode(text, return_tensors="pt", max_length=512, truncation=True)

# Generate summary with beam search
summary_ids = model.generate(
    inputs,
    max_length=50,
    min_length=25,
    length_penalty=2.0,
    num_beams=4,
    early_stopping=True,
)
summary = tokenizer.decode(summary_ids[0], skip_special_tokens=True)

print("Summary:", summary) |

So, now, all you need to do is combine this code with the earlier snippets and see your text summarization model working.

However, this is not the last step; there are two more that you need to perform.

8. Evaluation:

The name says it all: in this step, you need to evaluate the summarization model to determine its output quality.

For this, the ROUGE metric can be used to measure the overlap between the output summary and the reference summary. It used to be loaded with load_metric from datasets, but that function has been removed from recent datasets releases, so the code below loads it through the evaluate library instead.

| import evaluate

# Load metric (requires the rouge_score package)
rouge = evaluate.load("rouge")

# Example summaries
generated_summary = "input text here."
reference_summary = "input text here."

# Compute ROUGE scores
scores = rouge.compute(predictions=[generated_summary], references=[reference_summary])
print("ROUGE Scores:", scores) |

By performing this step, you will get insight into how good the summaries generated by your model are.
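To see what "overlap" means in practice, here is a simplified sketch of ROUGE-1, which counts the individual words shared by the generated and reference summaries. It assumes plain whitespace tokenization and lowercasing; library implementations add their own tokenization and optional stemming, so their numbers can differ from this hand-rolled version. The function name `rouge_1` is hypothetical.

```python
from collections import Counter


def rouge_1(generated, reference):
    """Unigram-overlap precision, recall, and F1 between two summaries."""
    gen_counts = Counter(generated.lower().split())
    ref_counts = Counter(reference.lower().split())

    # Each shared word counts at most as often as it appears in both texts.
    overlap = sum((gen_counts & ref_counts).values())

    precision = overlap / max(sum(gen_counts.values()), 1)
    recall = overlap / max(sum(ref_counts.values()), 1)
    f1 = 0.0 if overlap == 0 else 2 * precision * recall / (precision + recall)
    return {"precision": precision, "recall": recall, "f1": f1}


print(rouge_1("the cat sat", "the cat sat on the mat"))
```

In this example every generated word appears in the reference (precision 1.0), but the summary covers only half of the reference's words (recall 0.5), which is why both numbers are reported alongside their F1 combination.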

9. Deployment:

Finally, the most loved part of development has arrived. This step refers to making your summarization model accessible to everyday users.

You can turn it into either an application or a web-based tool. FastAPI can be used to expose the model as a REST API that both web and mobile applications can call. This integration will allow users to quickly summarize lengthy text on the go.

So, after going through all these steps, you will have a good idea of how to build a text summarization application.

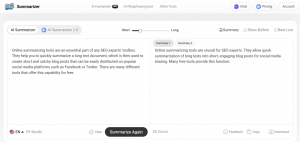

Example of AI Text Summarization App

To demonstrate how exactly your developed model will work, we have found a decent AI-powered text summarization app. It performs abstractive summarization and uses NLP and machine learning technologies for maximum quickness and accuracy.

A screenshot of its input and output is attached below; check it out.

Final Words

Text summarizing apps and tools have become quite popular in recent years. As a developer, you might want, or be asked, to develop such a solution. In that situation, do not panic, as it is not a difficult task if you follow the right approach. In this detailed guide, we have explained a step-by-step procedure for developing an AI-backed text summarizing model.